Meta releases Segment Anything 2: the most powerful video and image segmentation model, LangChain launches the AI Agent IDE, and more!

Hey friends 👋,

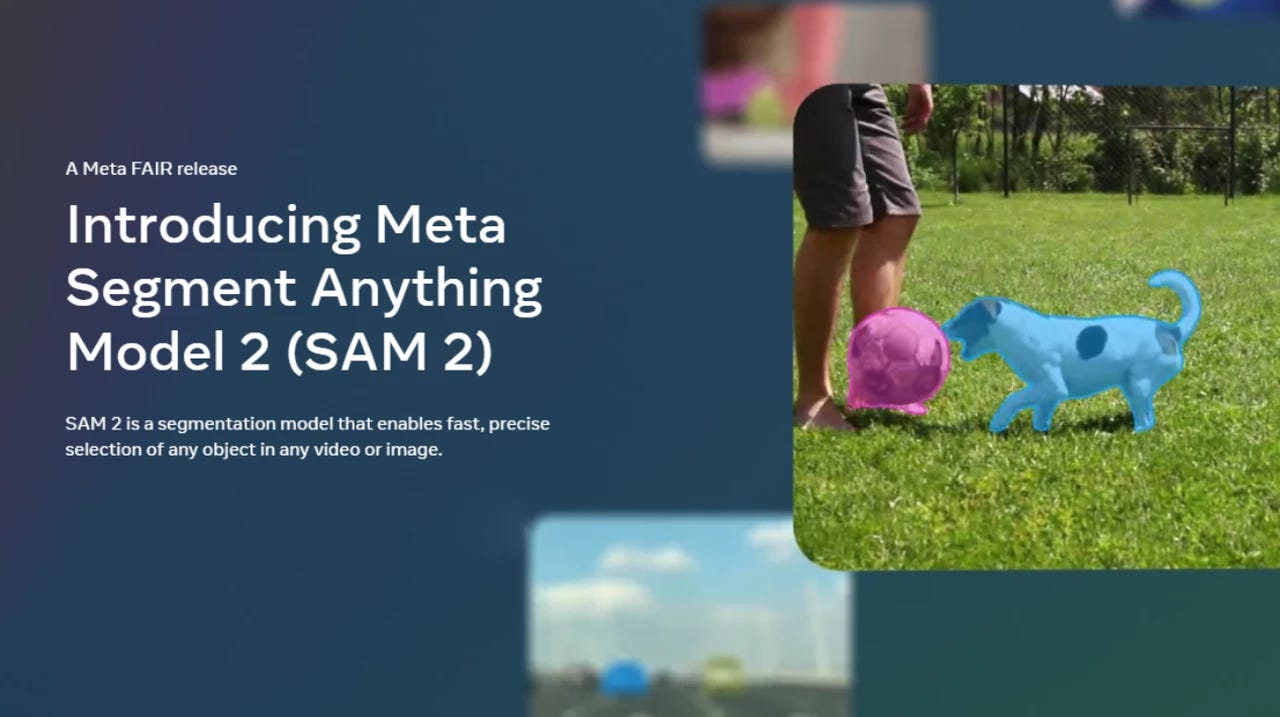

If you read last week’s issue, you probably know that I am a major fan of Meta’s move towards Open Source AI. In this issue, I want to talk about another step Meta is taking in advancing the AI industry with the launch of their brand new Segment Anything Model 2 (SAM 2).

What is SAM 2 and Why Does It Matter?

SAM 2 is a unified model for real-time object segmentation in images and videos. The best part? It’s COMPLETELY OPEN SOURCE!

Core Innovations and Functionality

SAM 2 enables promptable segmentation, allowing users to select objects in videos and images using clicks, boxes, or masks. The model can segment any object, even in previously unseen visual domains. For video, it relies on a memory mechanism that stores information about the object and past user interactions, so it can keep following the object from frame to frame. This mechanism includes a memory encoder, memory bank, and memory attention module.
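To make the memory encoder, memory bank, and memory attention idea a bit more concrete, here is a minimal toy sketch in plain Python. It is not Meta's implementation: the class and function names, the queue sizes, and the "features" (just the mask area) are all my own assumptions for illustration. The only idea it tries to capture is a bounded memory of recent frames plus a separate memory of frames the user prompted, which later frames can consult.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class MemoryBank:
    """Toy memory bank: one bounded queue of recent frames, one of prompted frames.

    The capacities are illustrative assumptions, not SAM 2's real settings.
    """
    max_recent: int = 6
    max_prompted: int = 4
    recent: deque = field(init=False)
    prompted: deque = field(init=False)

    def __post_init__(self):
        # deque(maxlen=...) silently drops the oldest entry when full (FIFO).
        self.recent = deque(maxlen=self.max_recent)
        self.prompted = deque(maxlen=self.max_prompted)

    def add(self, frame_idx, features, was_prompted=False):
        target = self.prompted if was_prompted else self.recent
        target.append((frame_idx, features))

    def context(self):
        # Prompted frames first, then the most recent unprompted frames.
        return list(self.prompted) + list(self.recent)


def encode_memory(frame_idx, mask):
    """Stand-in for a memory encoder: summarize a binary mask by its area."""
    return {"frame": frame_idx, "area": sum(mask)}


def attend(bank):
    """Stand-in for memory attention: average the remembered mask areas."""
    entries = bank.context()
    if not entries:
        return None
    return sum(features["area"] for _, features in entries) / len(entries)


# Frame 0 is prompted by a user click; frames 1 to 3 are tracked automatically.
bank = MemoryBank(max_recent=2, max_prompted=1)
bank.add(0, encode_memory(0, [1, 1, 0, 0]), was_prompted=True)
for idx, mask in enumerate([[1, 1, 1, 0], [1, 0, 0, 0], [1, 1, 1, 1]], start=1):
    bank.add(idx, encode_memory(idx, mask))

remembered_frames = [frame_idx for frame_idx, _ in bank.context()]
expected_area = attend(bank)
print(remembered_frames, expected_area)
```

The prompted frame stays in memory while older unprompted frames fall out of the queue, which is the intuition behind keeping the user's intent around for the whole video.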

To train SAM 2, Meta AI researchers collected the largest video segmentation dataset to date. As all machine learning practitioners know, good data (and a lot of it!) is key to an amazing model.

Performance and Efficiency

SAM 2 demonstrates significant performance improvements:

Operates at 44 frames per second for video segmentation.

Requires three times fewer interactions for video segmentation compared to previous models.

Provides an 8.4 times speed improvement in video annotation compared with annotating each frame using the original SAM.

Achieves higher accuracy in image segmentation than its predecessor, SAM, and is six times faster.
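To get a feel for what these numbers mean in practice, here is a small back-of-the-envelope sketch. The 44 frames per second, 8.4 times, and 3 times figures come from the list above; the baseline values (84 minutes of annotation, 30 clicks) are hypothetical inputs I picked purely for illustration.

```python
FPS = 44  # reported video segmentation speed

# Time available per frame if the model keeps up with 44 frames per second.
frame_budget_ms = 1000 / FPS


def annotation_time(baseline_minutes, speedup=8.4):
    """Estimated annotation time after applying the reported speedup."""
    return baseline_minutes / speedup


def interactions_needed(baseline_clicks, reduction=3):
    """Estimated number of interactions after the reported reduction."""
    return baseline_clicks / reduction


# Hypothetical baseline: 84 minutes of annotation and 30 clicks per video.
print(f"Per-frame budget: {frame_budget_ms:.1f} ms")
print(f"Annotation time: {annotation_time(84):.1f} min")
print(f"Interactions: {interactions_needed(30):.0f}")
```

In other words, the model has a bit under 23 milliseconds per frame, which is what makes interactive use feel responsive.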

SA-V Dataset

Meta also released the SA-V dataset, which includes roughly 51,000 videos and over 600,000 masklets. It contains about 4.5 times more videos than the previous largest video segmentation dataset, and it is the data that SAM 2's video segmentation was trained on.

Technical Specifications and Access

Developers can access SAM 2 through a web-based demo and use the released code and model weights under an Apache 2.0 license. The provided tools and datasets facilitate extensive research and development in computer vision.

Key Features

Zero-shot generalization: Segments objects in unseen visual domains without custom adaptation.

Memory mechanism: Stores and recalls past segmentation data for continuous tracking across frames.

Real-time processing: Enables efficient, interactive applications with streaming inference.

Extensive dataset: The SA-V dataset enhances training with diverse, real-world video scenarios.

High accuracy: Outperforms existing models in both image and video segmentation benchmarks.

If you know me, you probably know I got into the machine learning field by working on computer vision projects. Specifically, I spent countless hours on Medical Deep Learning projects. To this day, I still believe Computer Vision will be one of the most important fields impacted by AI, especially in the healthcare sector.

One of the most important tasks in Medical AI is segmentation. The ability of SAM 2 to perform real-time, accurate segmentation with minimal user input is a huge leap forward. This technology could significantly enhance medical imaging applications, like segmenting tumors in real time during surgeries.

In my opinion, this advancement in computer vision is as important as the mainstream adoption of transformers in the LLM space (Yes, I know SAM 2 also uses transformers).

You can learn more about SAM 2 here and here.

LangChain launches LangGraph Studio

LangChain has released LangGraph Studio, a specialized IDE for developing and debugging AI agents. Key features include:

Visual Workflow Management: Provides a graphical interface for designing and interacting with agent workflows, simplifying debugging and optimization.

Advanced Debugging Tools: Allows setting breakpoints, editing states, and resuming workflows, enabling detailed inspection and modification of agent behavior.

Seamless Integration with LangGraph Cloud: Supports scalable deployment and management of agents, handling task distribution, persistence, and performance tracking.

LangGraph Studio enhances the development process, offering a robust environment for creating reliable and scalable AI agents.
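If the "set a breakpoint, edit the state, resume" loop sounds abstract, here is a tiny toy sketch of the idea in plain Python. To be clear, this is not LangGraph's or LangGraph Studio's API: `ToyWorkflow`, its `run` method, and the `plan` and `act` steps are hypothetical names I made up to illustrate the pattern.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

State = Dict[str, object]
Step = Tuple[str, Callable[[State], State]]


class ToyWorkflow:
    """Hypothetical linear agent workflow that can pause before named steps."""

    def __init__(self, steps: List[Step], breakpoints: Optional[Set[str]] = None):
        self.steps = steps
        self.breakpoints = breakpoints or set()
        self.position = 0  # index of the next step to execute

    def run(self, state: State, resume: bool = False) -> Tuple[State, Optional[str]]:
        """Run until the next breakpoint or the end.

        Returns the state and the name of the step we paused before
        (None if the workflow finished). Pass resume=True to step past
        the breakpoint we are currently paused on.
        """
        while self.position < len(self.steps):
            name, fn = self.steps[self.position]
            if name in self.breakpoints and not resume:
                return state, name
            resume = False
            state = fn(dict(state))  # each step works on a copy of the state
            self.position += 1
        return state, None


steps: List[Step] = [
    ("plan", lambda s: {**s, "plan": "search for " + str(s["question"])}),
    ("act", lambda s: {**s, "result": str(s["plan"]).upper()}),
]
workflow = ToyWorkflow(steps, breakpoints={"act"})

# Run until the breakpoint, inspect and edit the state, then resume.
paused_state, paused_at = workflow.run({"question": "sam 2"})
original_plan = paused_state["plan"]
paused_state["plan"] = "summarize sam 2"
final_state, finished_at = workflow.run(paused_state, resume=True)
print(paused_at, final_state["result"])
```

The point of the pattern is that you can correct an agent mid-run instead of restarting it from scratch, which is what makes this style of debugging so pleasant.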

Even with my limited exploration of the software over the past two days, I am already in love. Everything from the simple tutorial LangChain provides to some of my more complex agentic workflow plans runs beautifully.

You have reached the end of this issue of the Saturday Press. This was a bit of a shorter issue, as I am still experimenting with the style of writing. Please share your feedback about the things you like, the things you really like, and the things you don’t.

Until next time, stay curious and keep exploring!

Mohamed